Columns vs Rows - A deep dive into Data and Optimisation.

The Data Analytics space has been abuzz with content about columnar data for the last couple of years, with DuckDB shaking up the OLAP (OnLine Analytical Processing) and database scene, Apache Parquet files being supported by data warehouses like Google’s BigQuery, and Apache Arrow being used in ELT tools like CloudQuery.

But when is it actually appropriate? Do you actually benefit from pivoting your data? Should you use columnar data storage by default?

This actually goes beyond the simplistic representation provided by most introductory articles.

The reality is that in modern databases and data architectures, the simplistic argument doesn’t actually hold true.

But before I get into it, let’s first restate the description of columnar and tabular data.

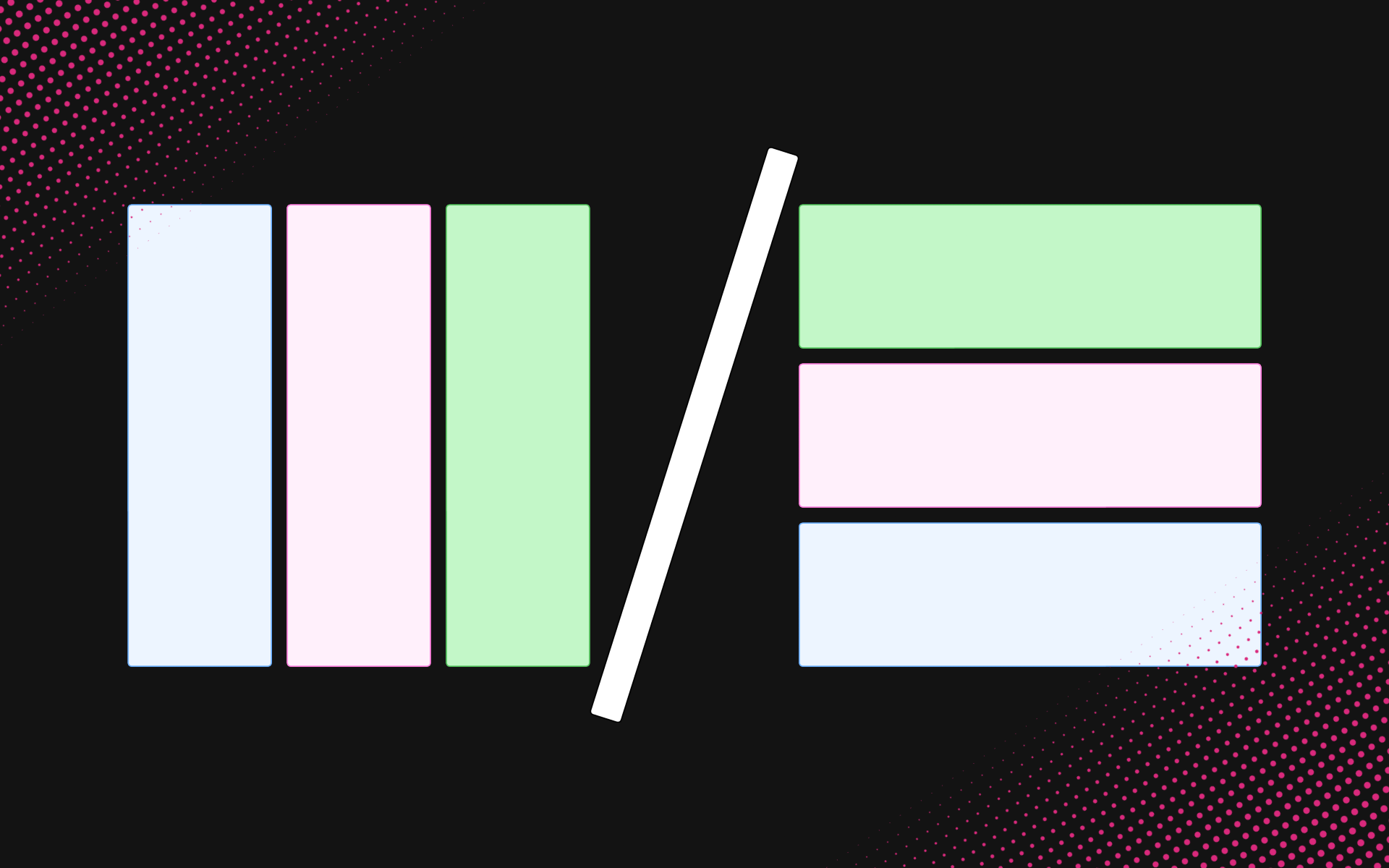

Columns vs Rows

Essentially, the primary difference is as simple as it sounds. Columnar databases and formats store data in columns, and tabular databases and formats store data in rows.

The most simplistic example of this is a CSV (Comma-Separated Values) file:

reg_plate,wheels,doors,engine_size,fuel_type

ABC12DEF,4,5,2.8,diesel

BCD23EFG,4,2,3.0,petrol

CDE34FGH,4,4,2.0,petrolVs a CSV file where I’ve pivoted the data:

reg_plate,ABC12DEF,BCD23EFG,CDE34FGH

wheels,4,4,4

doors,5,2,4

engine_size,2.8,3.0,2.0

fuel_type,diesel,petrol,petrolYou could argue that this is a truly separate format and call it a CCSV (Columnar CSV) if you really wanted, as most CSV parsers would have a hard time reading it. But that felt a little disingenuous to me.

So what are the benefits?

Reading line-by-line, as computers do, in the Traditional CSV format, you get one singular object. Making it quick and easy to access all the information about that object.

Whereas reading line-by-line in the Columnar CSV format, you get all of the data for one type of information. Making it quick and easy to analyse differences in that specific type of information.

But to get to the bottom of this, we need to go a bit deeper.

Tabular data - The computer science

Traditionally, in computer science, we think of data in terms of Object-Relational Models (ORMs), where you have some class or struct that represents some object, which is then stored in a row of a table. In a typical beginner’s tutorial, you might see something like:

@dataclass

class Car:

reg_plate: str

wheels: int = field(default=4)

doors: int = field(default=5)

engine_size: float = field(default=2.0)

fuel_type: FuelType = field(default=Diesel)Which would map to a table that looks something like:

| primary_key (INT) | reg_plate (VARCHAR[8]) | wheels (INT) | doors (INT) | engine_size (REAL) | fuel_type (VARCHAR[16]) |

|---|---|---|---|---|---|

| 1 | ABC12DEF | 4 | 5 | 2.8 | diesel |

| 2 | BCD23EFG | 4 | 2 | 3.0 | petrol |

| 3 | CDE34FGH | 4 | 4 | 2.0 | petrol |

But let’s simplify this.

Imagine you didn’t have a database like PostgreSQL, how might you do that?

Well, the most memory-efficient approach would be an Array of these objects.

But that has three main downsides:

- (Re)allocation - adding more items than the initially allocated space requires reallocation, which requires copying the old data to a new space in memory - this will fail if you don’t have a sufficient contiguous block of memory available. - Complexity: O(N)

- Inserts - inserting an item in any position other than at the end of the list requires moving all items that would follow it. - Complexity: O(N)

- Deletes - similar to inserting, removing an item anywhere other than the last position requires moving all items that follow into the vacancy of the item preceding it. - Complexity: O(N)

The upsides:

- Reads - both sequential and random reads are instant. - Complexity: O(1)*

- Binary search - binary/bisecting search using the primary key is as fast as computationally possible. - Complexity: O(LogN)

* When we’re talking about the degree of optimisation that we’re going into in this article, it’s important to note that not all O(1) complexity algorithms are created equal. But we’ll get into this more later.

What about Linked Lists?

Do they have a purpose outside of coding interviews and university? Yes.

Linked Lists actually solve all three issues of using arrays, and don’t significantly affect sequential reads:

- Reallocation - doesn’t exist, each new object takes up the first available place in memory that’s big enough to hold it. - Complexity: N/A

- Inserts - adding a new item anywhere in the list only requires modifying the next and previous markers of the adjacent objects. - Complexity: O(1)

- Deletes - removing an item anywhere in the array only requires modifying the next and previous markers of the adjacent objects. - Complexity: O(1)

However, it’s a double-edged sword:

- Random reads - random reads now require traversing all items that precede the item that we are looking for. - Complexity: O(N)

- Binary searches - binary/bisecting search using the primary key is significantly slowed, as you now need to traverse each item while moving a cursor. - Complexity: O(NLogN)

But here’s the thing: When searching the data using anything other than the primary key, we need to traverse all objects sequentially anyway, so the costs of linked lists are somewhat minimised**.

** As mentioned in the footnotes of the section on Arrays, while sequential reads in linked lists are also technically O(1) complexity, that doesn’t actually mean they have identical performance, but again, more on this later.*

Columnar data - The computer science

Ok, so what is a columnar data format?

Well, as the name suggests, this is data that is structured in columns rather than rows.

+In simple terms, this could be represented by having a collection for each parameter of our original object.

car__reg_plate: List[str] = []

car__wheels: List[int] = []

car__doors: List[int] = []

car__engine_size: List[float] = []

car__fuel_type: List[FuelType] = []At first blush, this doesn’t appear to have brought us any significant benefit, and has added additional work to any time we want to provide a result, as we now have to create the response object rather than just retrieve it, and depending on the type of collection used (Linked Lists or Arrays), we have the same performance properties.

But that’s not 100% true; it’s a convenient lie told to us by Big-O notation and algorithmic complexity theory.

The problems with Big-O

In reality, CPUs don’t have instant access to everything stored in RAM (or worse, an SSD; or even worse, an HDD). CPUs operate on caches of that data, stored in ultrafast on-chip memory, which is usually tiered into three layers:

- L1 - This is the fastest and most expensive; it is directly attached to each core of the CPU, and is typically only a few kilobytes to a couple of megabytes long. Usually, this is split into two sections: L1I - for instructions, and L1D - for the data.

- L2 - This is the middle ground; depending on the specific chip architecture, it typically spans a pair of cores and is a few hundred kilobytes to a few megabytes long.

- L3 - This is historically the uppermost layer, spanning the whole chip, and is commonly measured in tens to hundreds of megabytes in modern systems.

- L4 - On chiplet-based CPU architectures, a fourth layer is commonly introduced, which spans multiple chiplets.

So, how do these caches affect the performance of different data structures?

This is where the differences between O(1) algorithms come to light.

Let’s again consider a simple array:

When iterating over an array, the CPU would pre-fetch as much of the array as will fit into the highest level of cache. It then copies as much of that data as will fit into the lower layers of cache. The CPU then iterates over each element of the data in the L1 cache, swapping pages (chunks of cache lines) of data in and out as needed.

In the case of a linked list, while modern Operating Systems, Compilers, and CPU microcode are all highly optimised to make it as efficient as possible, they still need to iterate over the data in RAM just to load it into the cache. Requiring the CPU to swap multiple pages of data from the RAM in and out of the highest layer, effectively removing the benefit of the highest cache layer entirely.

This drastically impacts performance, as fetching data from RAM is considerably slower than fetching data from the L3 cache (potentially up to 300x slower, depending on CPU and RAM clock speeds and bandwidth).

Meaning, even a sequential read from a linked list at scale is considerably slower than that of an array.

But what does all this have to do with columnar data?

Well, the standard argument goes like this: If you’re iterating over entire objects to check a singular value, then the rest of that object is wasted cache space.

With columnar data, you just load the specific column into the cache, vastly improving performance when working with big data and wide tables.

This can be further optimised by widening the columns slightly to make them into tuples, appending the item’s index to each item, thereby enabling the entire column to be sorted and allowing the use of a binary search on any column.

That’s great, right? By pivoting how we store the data, we can maximise cache use.

The performance improvements this promises are massive!…

But here’s the thing: that’s also all theory.

Tabular data - The SeQueL

The reality is that this optimisation has been known and exploited by tabular databases (like PostgreSQL, Oracle, MySQL, etc.) and data architects for decades.

If your data is structured correctly, with appropriate indices configured, you won’t actually see any significant performance improvement by embedding DuckDB into your pipeline.

In fact, because of the need to clone the data across the network and then transform it from your tabular data structures into columns, it can actually be slower.

I’ll elaborate.

Without diving into the differences of 3rd Normal Form (3NF) and Star Schemas, which can be used to further enhance the performance of a tabular database, and is the subject of an entirely separate post I’m working on, let’s just consider what a run-of-the-mill index is within PostgreSQL, and similar databases.

An index is, essentially, a sorted array that contains a tuple of the key’s value and the index within the table where it can be found.

In very simplified terms, equivalent Python code might look like:

@dataclass

class Car:

reg_plate: str

wheels: int = field(default=4)

doors: int = field(default=5)

engine_size: float = field(default=2.0)

fuel_type: FuelType = field(default=Diesel)

CARS: List[Car] = []

CARS__reg_plate: List[Tuple[str, int]] = []In this example, we would sort the CARS__reg_plate array by the zeroth item of each tuple, enabling us to do a binary search through the registration plates to find a specific plate within O(LogN) time, while making maximal use of the CPU’s caching architecture.

Seem familiar? An index IS a columnar data format, and always has been.

The major difference is that in a columnar database, like DuckDB, it’s all columns, which introduces its own set of problems, but for the most part enables Data Architects to be lazy and get the benefits of binary searches without having to plan out and maintain a detailed Star Schema.

I can, and will, prove the performance comparison in the future with a follow-up, once I’ve had the time to construct a suitable performance test that showcases both pure Python examples and real-world comparisons using DuckDB vs. PostgreSQL.

But in the meantime, I hope I’ve provided some insight into the differences and similarities of tabular vs columnar data storage.

For more content like this, check out my series on building a NoSQL DB from scratch using LMDB.